Posts

-

Get RDS Instance Type RAM in Terraform

For a monitoring project I’m currently working on, I need to get the amount of RAM an RDS instance has. Unfortunately, the aws_db_instance resource doesn’t expose this information. Terraform does, however, give us an external data source that we can take advantage of to provide the data.
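Below is a minimal sketch of the program side of the `external` data source. It assumes bash is available wherever Terraform runs.

- **Protocol:** Terraform writes the `query` map to the program's stdin as a JSON object and expects a flat JSON object of string values on stdout.
- **Assumptions:** the function name `rds_ram_gib`, the `instance_class`/`ram_gib` keys and the short RAM table are illustrative. They are not taken from the original post. Extend the table from AWS's RDS instance class documentation for the classes you actually use.

```bash
#!/usr/bin/env bash
# Hypothetical program for Terraform's `external` data source.
# Terraform writes the query map to stdin as JSON, e.g.
#   {"instance_class":"db.t3.small"}
# and expects a flat JSON object of string values on stdout.
rds_ram_gib() {
    local input class ram
    local re='"instance_class"[[:space:]]*:[[:space:]]*"([^"]+)"'
    input=$(cat)

    # Minimal extraction without jq; assumes a simple query object.
    if [[ $input =~ $re ]]; then
        class=${BASH_REMATCH[1]}
    else
        echo "instance_class not supplied" >&2
        return 1
    fi

    # Illustrative sample of RDS instance classes and their memory in GiB.
    case $class in
        db.t3.micro)  ram=1 ;;
        db.t3.small)  ram=2 ;;
        db.t3.medium) ram=4 ;;
        db.m5.large)  ram=8 ;;
        db.r5.large)  ram=16 ;;
        *)
            echo "unknown instance class: $class" >&2
            return 1
            ;;
    esac

    # Terraform requires every value in the result to be a string.
    printf '{"ram_gib":"%s"}\n' "$ram"
}
```

To wire it up, save the function as, say, `rds_ram.sh`, ending with a call to `rds_ram_gib`. A `data "external"` block can then set:

- `program = ["bash", "${path.module}/rds_ram.sh"]`
- `query = { instance_class = aws_db_instance.example.instance_class }`

The value is read back as `data.external.<name>.result.ram_gib`. The resource name `example` is hypothetical.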

-

Why doesn't SELinux log a denied message?

If you’re wondering why SELinux is not printing a “denied” message in /var/log/audit/audit.log, it’s probably because somebody wanted to hide it! Yes, it’s possible to prevent an SELinux module from logging a denied message. Disable this behaviour by executing the following command:

-

Terraform will damage your Computer

Today I experienced the following error on my Intel MacBook Pro when attempting to run Terraform…

-

Generate BATS Unit Tests with ChatGPT

Of course, everyone has now heard about ChatGPT. I’ve seen a few posts on LinkedIn where people have used it to generate code, and I thought I’d have a quick go at seeing whether ChatGPT would generate useful unit tests. So I gave it the following bash function…

-

Install PyLint on Python 2.7

When installing pylint with Python 2.7 you may encounter the following problem…

-

log4j vulnerability scanner

WARNING: As with any tool you download from the Internet…

- Check the source code.

- Don’t run as root or as any other highly privileged user.

- Run only in a test system and never in prod.

- Keep out of reach of children.

-

FizzBuzz with Python

Why? Simply because I haven’t attempted the FizzBuzz problem for some time!

-

WordPress to GitHub Pages Migration

I’ve just completed the migration of a WordPress blog to GitHub Pages using the Ruby-based Jekyll blogging engine. The following resource was very useful…

-

Welcome to Jekyll!

You’ll find this post in your `_posts` directory. Go ahead and edit it and re-build the site to see your changes. You can rebuild the site in many different ways, but the most common way is to run `jekyll serve`, which launches a web server and auto-regenerates your site when a file is updated.

-

How to tell if you're in a docker container

Sometimes you need to know, from the shell, whether you’re inside a Docker container. Here’s how you can do that…
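A rough sketch using two common heuristics, assuming a Linux container:

- Docker creates a `/.dockerenv` file in the container's root.
- On hosts using cgroup v1, the cgroup paths in `/proc/1/cgroup` mention `docker`.

Neither check is guaranteed; cgroup v2 hosts usually don't show `docker` there. The post's own approach may also differ. The function name `in_docker` is hypothetical, and its optional root-prefix argument exists only to make it testable.

```bash
#!/usr/bin/env bash
# Heuristic check for running inside a Docker container.
# Assumption: Docker creates /.dockerenv, and on cgroup v1 hosts
# /proc/1/cgroup mentions "docker". Neither check is guaranteed.
# The optional first argument is a root prefix (default: the real root).
in_docker() {
    local root="${1:-}"
    if [[ -f "${root}/.dockerenv" ]]; then
        return 0
    fi
    if [[ -r "${root}/proc/1/cgroup" ]] && grep -q docker "${root}/proc/1/cgroup"; then
        return 0
    fi
    return 1
}
```

Usage: `if in_docker; then echo "inside a container"; fi`.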

-

Disable line-length Yamllint rule in Molecule

Just a quick post on how to disable the yamllint line-length rule in molecule tests (I’m always forgetting).

-

Ignore PEP rules with Molecule / Testinfra / flake8

I’m always forgetting how to configure my molecule.yml file to ignore certain PEP8 rules. Here’s a quick example showing how to ignore the E501 Line too long rule:

-

Using Ansible Modules with Testinfra

I’ve been looking at improving the quality of the testing I do with Molecule and Testinfra. Simple checks like service.is_running or package.is_installed have their place, but they’re pretty limited in the assurances they provide. Part of the issue is that some tests need a fair bit of setup to make them worthwhile, and tackling that in raw Python can be tricky. A better approach is to use the Ansible module available within Testinfra. We can call an Ansible module with the following Python code:

-

Using Bash brace expansion to generate multiple files

I needed to generate a whole bunch of files, with identical content, for a recent task. You might automatically think of using a loop for such a task but there’s a much simpler method using brace expansion in the shell.
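One loop-free way to do this, assuming the shared content can be piped through `tee`:

- Brace expansion turns `file_{1..10}.txt` into ten separate arguments before `tee` runs.
- `tee` then copies its stdin into every file it is given.

The file names and content are placeholders, and this is a sketch rather than the post's exact command.

```bash
#!/usr/bin/env bash
# Scratch directory so the example doesn't litter the current directory.
workdir=$(mktemp -d)
cd "$workdir" || exit 1

# Brace expansion runs before the command, so this line becomes
#   tee file_1.txt file_2.txt ... file_10.txt
# and tee copies its stdin into every one of those files.
echo "identical content" | tee file_{1..10}.txt > /dev/null

ls "$workdir"
```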

-

Linux Server checks with Goss

I’ve been playing a little with goss recently. Goss is similar to Testinfra in that it allows you to write tests to validate your infrastructure, but it uses YAML to specify the expected state rather than Python unit tests as Testinfra does. It also has a couple of other interesting features that make it stand out from the crowd…

-

Wait for processes to end with Ansible

I’ve been doing a lot of stuff in Ansible recently where I needed to fire up, kill and relaunch a bunch of processes, and I wanted a quick and reliable way of managing this…

-

Broken sudo?

If you somehow add a dodgy sudo rule you might end up breaking it completely…

-

Linux: Reclaim disk space used by "deleted" files

I had a misbehaving application consuming a large amount of space in /tmp. The files were not visible in the /tmp volume itself but lsof allowed me to identify them.
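For reference, `lsof +L1` lists open files whose link count is below one, i.e. files that are deleted but still held open. On Linux the same information is visible under `/proc`. The sketch below takes that approach; the function name is hypothetical.

Once the process and descriptor are known, the space can usually be reclaimed without restarting the application. Truncate the file through the descriptor, e.g. `: > /proc/PID/fd/FD`. Only do this if you are sure the application can tolerate its file being emptied.

```bash
#!/usr/bin/env bash
# Linux only: print "PID FD TARGET" for every readable file descriptor that
# still points at a deleted file. Roughly what `lsof +L1` reports.
find_deleted_open_files() {
    local fd target pid
    for fd in /proc/[0-9]*/fd/*; do
        # Descriptors of other users' processes are usually unreadable; skip them.
        target=$(readlink "$fd" 2>/dev/null) || continue
        if [[ $target == *" (deleted)" ]]; then
            pid=${fd#/proc/}    # "1234/fd/5"
            pid=${pid%%/*}      # "1234"
            echo "$pid ${fd##*/} $target"
        fi
    done
}
```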

-

ansible-vault unexpected exception on Ubuntu

When attempting to edit an ansible-vault file…

-

"could not open session" error in docker container

I received the following error when attempting to cat a log file inside a Docker container while troubleshooting another issue…

-

Ansible: stop / start services on random hosts

In the coming weeks I’m performing some testing of a new application on a Cassandra cluster. To add a little randomness into some of the tests I thought it would be interesting to give the Cassandra service a little kick. I created a simple Ansible playbook this afternoon that does this. A simple Chaos Monkey if you like. Here’s the basic flow of the playbook…

-

Use restview to make the Ansible rst documentation browsable

The ansible-doc package not only installs the command-line tool but also some quite detailed Ansible documentation in rst format. It would be nice if it were browsable as HTML. Here’s how that can be done (Red Hat/CentOS):

-

Create a space-separated list of play_hosts in Ansible

Sometimes I need a list of hosts as a string when working with Ansible; Pacemaker clustering is one example. Here’s a snippet of Ansible that does this…

-

Offset cron jobs with Ansible

Sometimes I want to run the same cronjob on a few hosts but I might want to offset them slightly if I’m accessing any shared resources. Here’s an easy way to do that, for a small number of hosts, using Ansible…

-

ssh-copy-id automation with a list of hosts

Here’s another version of my ssh-copy-id script, this time using a text file containing a list of hosts. The hosts file should contain a single host per line.
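A minimal sketch of such a loop, not necessarily the post's script. It assumes one host per line and skips blank lines and `#` comments. The function name and the optional command argument (handy for a dry run with `echo`) are hypothetical.

```bash
#!/usr/bin/env bash
# Run ssh-copy-id once per host listed in a file (one host per line).
# Blank lines and lines starting with '#' are skipped.
# The second argument (default: ssh-copy-id) allows a dry run, e.g. "echo".
copy_ids_from_file() {
    local hosts_file=$1 cmd=${2:-ssh-copy-id} host
    # "|| [[ -n $host ]]" still processes a final line with no trailing newline.
    while IFS= read -r host || [[ -n $host ]]; do
        [[ -z $host || $host == \#* ]] && continue
        # </dev/null stops the command from swallowing the rest of the file
        # from the loop's stdin; ssh normally prompts for passwords on the terminal.
        "$cmd" "$host" < /dev/null
    done < "$hosts_file"
}
```

Usage: `copy_ids_from_file hosts.txt`, or `copy_ids_from_file hosts.txt echo` to preview.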

-

Creating a Vagrant, VirtualBox & Ansible environment in the Windows Subsystem for Linux

I’ve just been given a new Windows corporate laptop with a huge amount of RAM (64GB) and a large number of cores, and I wanted to start using it as my main development virtualisation platform. I do a lot of stuff with Vagrant, Ansible and VirtualBox, and Windows hasn’t always been a welcoming home for this setup. The Windows Subsystem for Linux (WSL) offers a much friendlier experience and is a big improvement over Cygwin. The instructions here used Debian 9.5 but should work on many other Linux distributions with minor modifications (e.g. the package manager).

-

Vagrant: Create a series of VMs from a hostname array

I couldn’t find any examples online of creating VMs from an array of strings, so I sat down to work something out myself. Here’s how you do it…

-

Automate ssh-copy-id with numbered hosts

Here’s a script I use to automate ssh-copy-id when I need to add a series of hosts with an incremental node number. For example…
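A minimal sketch of the numbered variant. It assumes hosts share a common prefix followed by a plain, unpadded number, such as node1 to node5. `copy_ids_numbered` and its optional command argument are hypothetical. For zero-padded names such as node01, `printf -v host '%s%02d' "$prefix" "$i"` could build the name instead.

```bash
#!/usr/bin/env bash
# Run ssh-copy-id against PREFIX<first> .. PREFIX<last>, e.g. node1..node5.
# The optional fourth argument (default: ssh-copy-id) allows a dry run with "echo".
copy_ids_numbered() {
    local prefix=$1 first=$2 last=$3 cmd=${4:-ssh-copy-id} i
    for (( i = first; i <= last; i++ )); do
        "$cmd" "${prefix}${i}"
    done
}
```

Usage: `copy_ids_numbered node 1 5`.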

-

Ansible Playbook for Raspberry Pi Headphones Setup

I’ve created another Ansible playbook for the Raspberry Pi, this time to set up Headphones. It’s hosted over on my GitHub: PiHeadphones

-

Ansible: Find files newer than another

I needed to figure out a way of identifying files newer than another one in Ansible. Here's an outline of the solution I came up with.

First we need to create some directories and files, with modified mtime values, that we can work with.

    mkdir dir1; mkdir dir2;
    # Set the time on this dir to 3 days ago
    # (date -v is BSD/macOS syntax; with GNU date use: date -d '3 days ago' +'%Y%m%d%H%M')
    touch -t $(date -v-3d +'%Y%m%d%H%M') dir1;

-

Ansible Raspberry Pi Projects

I’ve been playing around with a few Raspberry Pi units and thought I’d share the Ansible projects I’ve created. All these Ansible roles are intended for the Raspbian OS.

-

Setting up an Ansible Module Test Environment

I’ve begun developing some Ansible modules and have created a Vagrant environment to help with testing. You can check it out over on my GitHub. The environment was created to test some MongoDB modules but can easily be repurposed. It’s quite simple to get started:

-

Getting JConsole working over ssh with Cassandra

I’ve been working with Cassandra recently and wanted to start using JConsole. JConsole exposes some pretty useful information about Cassandra and the Java environment in which it runs. It operates over JMX, and by default JMX only accepts connections from localhost. Since we don’t run GUIs on our servers and didn’t want to open up the JMX service to remote connections, we needed to do a little wizardry to connect. First I thought of tunneling over ssh;

-

Jenkins test instance with Vagrant & Ansible

Here’s yet another project using Vagrant and Ansible, this time for a Jenkins instance. To get started, head on over to the Jenkins test instance GitHub page and consult the README for setup instructions.

-

AWX installation using Vagrant and Ansible

Over on my GitHub is a new project for firing up a test instance of AWX, based on the following AWX installation notes. The project is AWX-on-CentOS-7. You’ll need VirtualBox, Vagrant and Ansible installed to get it up and running. Getting started is simple:

-

Staged service restart with Ansible

I’ve been working on a small project to create a Cassandra Cluster for Development purposes. I’m using Vagrant and Ansible to deploy a 5-node Cassandra Cluster and node #5 would always fail to join the cluster.

-

MySQL 5.7: root password is not in mysqld.log

I came across this issue today when working on an Ansible playbook with MySQL 5.7. Old habits die hard, and I was still trying to use mysql_install_db to initialise my instance; it seems a few others have been doing the same. The effect of using mysql_install_db in more recent versions of MySQL is that we end up not knowing the root password, which is now set to a random value rather than being blank/unset. Nothing is logged to the mysqld.log file unless you use mysqld --initialize first;
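After running `mysqld --initialize`, MySQL 5.7 writes a line of the form `A temporary password is generated for root@localhost: ...` to the error log. A small helper to pull the password out might look like the sketch below. The function name is hypothetical. The default log path `/var/log/mysqld.log` is an assumption; it is the usual location for the RHEL/CentOS packages.

```bash
#!/usr/bin/env bash
# Print the temporary root password that `mysqld --initialize` writes to the
# error log. Assumes the MySQL 5.7 message format
#   "... A temporary password is generated for root@localhost: <password>"
# If the log contains several such lines, the most recent one wins.
mysql_temp_password() {
    local log=${1:-/var/log/mysqld.log}
    awk '/A temporary password is generated for/ { pw = $NF }
         END { if (pw != "") print pw }' "$log"
}
```

Usage: `mysql -uroot -p"$(mysql_temp_password)"`. Note that MySQL will insist that you change the password before doing anything else.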

-

A Cassandra Cluster using Vagrant and Ansible

I’ve started a new project to create a Cassandra Cluster for development purposes. It’s available on my GitHub and uses Vagrant, Ansible, and VirtualBox.

-

Using avahi / mDNS in a Vagrant project

I’m working on a project, with Vagrant and Ansible, to deploy a MongoDB Cluster. I needed name resolution to function between the VirtualBox VMs I was creating and didn’t want to hardcode anything in the hosts file. The solution I decided on uses avahi, which essentially works like Apple Bonjour. As this solution has broader applications than just a MongoDB cluster, I thought I’d share it here. The script is idempotent and is for Red Hat/CentOS systems.

-

Cassandra 3 Node Cluster Setup Notes

Install on each node

-

A Clone of the STRING_SPLIT MSSQL 2016 Function

I have recently been developing some stuff using MSSQL 2016 and used the STRING_SPLIT function. This doesn’t exist in earlier versions, and I discovered I would be required to deploy to 2008 or 2012. So here’s my own version of the STRING_SPLIT function, which I have developed and tested on MSSQL 2008 (it may also work on 2005).

-

A simple MariaDB deployment with Ansible

Here's a simple Ansible Playbook to create a basic MariaDB deployment.

The basic steps the playbook will attempt are:

- Install a few libraries

- Set up repos

- Install MariaDB packages

- Install Percona software

- Create MariaDB directories

- Copy my.cnf to server (note this is a template file and not supplied here)

- Run mysql_install_db if needed

- Start MariaDB

- Set root password

- Delete anonymous users

- Create myapp database and user

Note: some steps will only execute if a root password has not been set. These are identifiable by the following line:

when: is_root_password_set.rc == 0

This is the playbook in full:

-

A dockerized mongod instance with authentication enabled

Here’s a quick walkthrough showing how to create a dockerized standalone MongoDB instance.

-

Check MariaDB replication status inside Ansible

I needed a method to check replication status inside Ansible. The method I came up with uses the shell module...
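Below is a sketch of the kind of check a shell task could run. It assumes the classic `SHOW SLAVE STATUS\G` output, where `Slave_IO_Running` and `Slave_SQL_Running` both read `Yes` on a healthy replica.

The function name is hypothetical, and this may not match the post's exact command. In a playbook you would typically `register` the task and inspect its `rc`.

```bash
#!/usr/bin/env bash
# Reads `SHOW SLAVE STATUS\G` output on stdin and succeeds only if both
# replication threads report "Yes". The "$" anchors stop the match from
# also picking up the Slave_SQL_Running_State line.
replication_ok() {
    awk -F': ' '
        $1 ~ /Slave_IO_Running$/  { io = $2 }
        $1 ~ /Slave_SQL_Running$/ { sql = $2 }
        END { ok = (io == "Yes" && sql == "Yes"); exit (ok ? 0 : 1) }'
}
```

Usage: `mysql -e 'SHOW SLAVE STATUS\G' | replication_ok && echo "replication OK"`.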

-

my: a command-line tool for MariaDB Clusters

I’ve posted the code for my MariaDB Cluster command-line tool called my. It does a bunch of stuff but the main purpose is to allow you to easily monitor replication cluster-wide while working in the shell.

-

Notes from the field: CockroachDB Cluster Setup

Download the CockroachDB Binary

-

MongoDB: Making the most of a 2 Data-Centre Architecture

There’s a big initiative at my employers to improve the uptime of the services we provide. The goal is 100% uptime as perceived by the customer. There’s obviously a certain level of flexibility one could take in the interpretation of this. I choose to be as strict as I can about this to avoid any disappointments! I’ve decided to work on this in the context of our primary MongoDB Cluster. Here is a logical view of the current architecture, spread over two data centres;

-

MongoDB and the occasionally naughty query

It’s no secret that databases like uniqueness and high cardinality. Low cardinality columns do not make good candidates for indexes. A recent issue I had with MongoDB proved that NoSQL is no different in this regard.

-

A few Splunk queries for MongoDB logs

Here’s a few Splunk queries I’ve used to supply some data for a dashboard I used to manage a MongoDB Cluster.

-

Getting started with CockroachDB

I've been quite interested in CockroachDB as it claims to be "almost impossible to take down".

Here's a quick example of setting up a CockroachDB cluster. This was done on a Mac but should work with no, or minimal, modifications on *nix.

First, download the binary and add it to your PATH...

    wget https://binaries.cockroachdb.com/cockroach-latest.darwin-10.9-amd64.tgz
    tar xvzf cockroach-latest.darwin-10.9-amd64.tgz
    PATH="$PATH:/Users/rhys1/cockroach-latest.darwin-10.9-amd64"; export PATH;

Setup the cluster directories...

    mkdir -p cockroach_cluster_tmp/node1;
    mkdir -p cockroach_cluster_tmp/node2;
    mkdir -p cockroach_cluster_tmp/node3;
    mkdir -p cockroach_cluster_tmp/node4;
    mkdir -p cockroach_cluster_tmp/node5;
    cd cockroach_cluster_tmp

Fire up 5 CockroachDB nodes...

    cockroach start --background --cache=50M --store=./node1;
    cockroach start --background --cache=50M --store=./node2 --port=26258 --http-port=8081 --join=localhost:26257;
    cockroach start --background --cache=50M --store=./node3 --port=26259 --http-port=8082 --join=localhost:26257;
    cockroach start --background --cache=50M --store=./node4 --port=26260 --http-port=8083 --join=localhost:26257;
    cockroach start --background --cache=50M --store=./node5 --port=26261 --http-port=8084 --join=localhost:26257;

You should now be able to access the Cluster web-console at http://localhost:8084.

Command-line access is achieved with...

cockroach sql;

Those familiar with SQL will be comfortable...

    root@:26257/> CREATE DATABASE rhys;
    root@:26257/> SHOW DATABASES;
    root@:26257/> CREATE TABLE rhys.test (id SERIAL PRIMARY KEY, text VARCHAR(100) NOT NULL);
    root@:26257/> INSERT INTO rhys.test(text) VALUES ('Hello World');
    root@:26257/> SELECT * FROM rhys.test;

Any data you insert should be replicated to all nodes. You can check this with...

    cockroach sql --port 26257 --execute "SELECT COUNT(*) FROM rhys.test";
    cockroach sql --port 26258 --execute "SELECT COUNT(*) FROM rhys.test";
    cockroach sql --port 26259 --execute "SELECT COUNT(*) FROM rhys.test";
    cockroach sql --port 26260 --execute "SELECT COUNT(*) FROM rhys.test";
    cockroach sql --port 26261 --execute "SELECT COUNT(*) FROM rhys.test";
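The five near-identical `cockroach sql` calls above can be generated with a loop over the port range. This sketch assumes the nodes keep ports 26257 to 26261, as started earlier. `each_node_sql` and the `COCKROACH` override are hypothetical additions; set `COCKROACH=echo` for a dry run.

```bash
#!/usr/bin/env bash
# Run the same SQL statement against every node of the local test cluster.
# Assumes the five nodes listen on consecutive ports 26257-26261.
# COCKROACH (hypothetical override) defaults to the real binary.
each_node_sql() {
    local sql=$1 port
    for port in {26257..26261}; do
        "${COCKROACH:-cockroach}" sql --port "$port" --execute "$sql"
    done
}
```

Usage: `each_node_sql "SELECT COUNT(*) FROM rhys.test"`.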

We can also insert into any of the nodes...

    cockroach sql --port 26257 --execute "INSERT INTO rhys.test (text) VALUES ('Node 1')";
    cockroach sql --port 26258 --execute "INSERT INTO rhys.test (text) VALUES ('Node 2')";
    cockroach sql --port 26259 --execute "INSERT INTO rhys.test (text) VALUES ('Node 3')";
    cockroach sql --port 26260 --execute "INSERT INTO rhys.test (text) VALUES ('Node 4')";
    cockroach sql --port 26261 --execute "INSERT INTO rhys.test (text) VALUES ('Node 5')";

Check the counts again...

    cockroach sql --port 26257 --execute "SELECT COUNT(*) FROM rhys.test";
    cockroach sql --port 26258 --execute "SELECT COUNT(*) FROM rhys.test";
    cockroach sql --port 26259 --execute "SELECT COUNT(*) FROM rhys.test";
    cockroach sql --port 26260 --execute "SELECT COUNT(*) FROM rhys.test";
    cockroach sql --port 26261 --execute "SELECT COUNT(*) FROM rhys.test";

Check how the data looks on each node...

cockroach sql --port 26261 --execute "SELECT * FROM rhys.test";

    +--------------------+-------------+
    | id                 | text        |
    +--------------------+-------------+
    | 226950927534555137 | Hello World |
    | 226951064182259713 | Hello World |
    | 226951080098856961 | Hello World |
    | 226952456016003073 | Node 1      |
    | 226952456149368834 | Node 2      |
    | 226952456292663299 | Node 3      |
    | 226952456455684100 | Node 4      |
    | 226952456591376389 | Node 5      |
    +--------------------+-------------+
    (8 rows)

cockroach sql --port 26260 --execute "SELECT * FROM rhys.test";

    +--------------------+-------------+
    | id                 | text        |
    +--------------------+-------------+
    | 226950927534555137 | Hello World |
    | 226951064182259713 | Hello World |
    | 226951080098856961 | Hello World |
    | 226952456016003073 | Node 1      |
    | 226952456149368834 | Node 2      |
    | 226952456292663299 | Node 3      |
    | 226952456455684100 | Node 4      |
    | 226952456591376389 | Node 5      |
    +--------------------+-------------+
    (8 rows)

-

The blame game: Who deleted that file? Working with auditd

I've recently had an issue where a file was disappearing and I couldn't explain why. Without anything to blame it on, I searched for a way to log changes to a file and quickly found audit. Audit is quite extensive and can capture a vast array of information, but I'm only interested in monitoring a specific file here. This is for Red Hat-based systems.

First you'll need to install / configure audit if it's not already;

yum install audit

Check the service is running...

service auditd status

Let's create a dummy file to monitor...

echo "Please don't delete me\!" > /path/to/file/rhys.txt;

Add a rule to audit for the file. This adds a rule to watch the specified file with the tag *whodeletedmyfile*.

auditctl -w /path/to/file/rhys.txt -k whodeletedmyfile

You can search for any records with;

ausearch -i -k whodeletedmyfile

The following information will be logged after you add the rule;

    ----
    type=CONFIG_CHANGE msg=audit(02/02/2017 13:09:59.967:226727) : auid=user@domain.local ses=12425 op="add rule" key=whodeletedmyfile list=exit res=yes

Now let's delete the file and search the audit log again;

rm /path/to/file/rhys.txt && ausearch -i -k whodeletedmyfile

We'll see the following information;

    ----
    type=CONFIG_CHANGE msg=audit(02/02/2017 13:09:59.967:226727) : auid=user@domain.local ses=12425 op="add rule" key=whodeletedmyfile list=exit res=yes
    ----
    type=PATH msg=audit(02/02/2017 13:10:26.939:226735) : item=1 name=/path/to/file/rhys.txt inode=42 dev=fd:04 mode=file,644 ouid=root ogid=root rdev=00:00 nametype=DELETE
    type=PATH msg=audit(02/02/2017 13:10:26.939:226735) : item=0 name=/path/to/file/ inode=28 dev=fd:04 mode=dir,700 ouid=user@domain.local ogid=user@domain.local rdev=00:00 nametype=PARENT
    type=CWD msg=audit(02/02/2017 13:10:26.939:226735) : cwd=/root
    type=SYSCALL msg=audit(02/02/2017 13:10:26.939:226735) : arch=x86_64 syscall=unlinkat success=yes exit=0 a0=0xffffffffffffff9c a1=0xf9a0c0 a2=0x0 a3=0x0 items=2 ppid=27157 pid=27604 auid=user@domain.local uid=root gid=root euid=root suid=root fsuid=root egid=root sgid=root fsgid=root tty=pts0 ses=12425 comm=rm exe=/bin/rm key=whodeletedmyfile
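Reading the raw records gets tedious, so here is a small filter. It is a sketch that assumes `ausearch -i` prints one record per line, as in the output above. It keeps only `SYSCALL` records for common delete/rename calls and prints:

- who did it: `auid` (the original login user) and `uid`
- what did it: `comm` and `exe`

The function name is hypothetical.

```bash
#!/usr/bin/env bash
# Reads `ausearch -i` output on stdin and, for each SYSCALL record of an
# unlink/unlinkat/rename/renameat call, prints its auid, uid, comm and exe fields.
who_deleted() {
    awk '/^type=SYSCALL/ && / syscall=(unlink|unlinkat|rename|renameat) / {
        out = ""
        for (i = 1; i <= NF; i++)
            if ($i ~ /^(auid|uid|comm|exe)=/)
                out = out (out == "" ? "" : " ") $i
        print out
    }'
}
```

Usage: `ausearch -i -k whodeletedmyfile | who_deleted`.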

-

Working with the PlanCache in MongoDB

I’ve been working a little with the PlanCache in MongoDB to troubleshoot some performance problems we’ve been experiencing. The contents of the plan cache are JSON documents (obviously), which aren’t great to work with in the shell. Here are a couple of JavaScript functions I’ve come up with to make things a little easier.

-

InfluxDB: Bash script to launch and configure two nodes

I've just created a quick bash script because I'm working a little with InfluxDB at the moment. InfluxDB is a time series database written in Go.

The script will set up two InfluxDB nodes, set up some users, and download and load some sample data. It's developed on a Mac but should work on Linux (not tested yet, but let me know if there's any problem). I do plan further work on this, for example adding in InfluxDB-Relay. The script is available on my GitHub.

Usage is as follows...

Source the script in the shell

. influxdb_setup.sh

This makes the following functions available...

    influx_kill
    influx_run_q
    influx_admin_user
    influx_launch_nodes
    influx_setup_cluster
    influx_config1
    influx_mkdir
    influx_stress
    influx_config2
    influx_murder
    influx_test_db_user_perms
    influx_count_processes
    influx_noaa_db_user_perms
    influx_test_db_users
    influx_create_test_db
    influx_noaa_db_users
    influx_curl_sample_data
    influx_node1
    influx_http_auth
    influx_node2
    influx_import_file
    influx_remove_dir

You don't need to know in detail what most of these do. To set up two nodes just do...

influx_setup_cluster

If all goes well you should see a message like below...

    Restarted influx nodes. Logon to node1 with influx -port 8086 -username admin -password $(cat "${HOME}/rhys_influxdb/admin_pwd.txt")

Log on to a node with...

    influx -port 8086 -username admin -password $(cat "${HOME}/rhys_influxdb/admin_pwd.txt")

Execute "show databases"...

    name
    ----
    test
    NOAA_water_database
    _internal

Execute "show users"...

    user    admin
    ----    -----
    admin   true
    test_ro false
    test_rw false
    noaa_ro false
    noaa_rw false

N.B. Passwords for these users can be found in text files in $HOME/rhys_influxdb/

Start working with some data...

    SELECT * FROM h2o_feet LIMIT 5
    name: h2o_feet
    time                level description    location     water_level
    ----                -----------------    --------     -----------
    1439856000000000000 between 6 and 9 feet coyote_creek 8.12
    1439856000000000000 below 3 feet         santa_monica 2.064
    1439856360000000000 between 6 and 9 feet coyote_creek 8.005
    1439856360000000000 below 3 feet         santa_monica 2.116
    1439856720000000000 between 6 and 9 feet coyote_creek 7.887

-

mongodb_consistent_backup: A quick example

Just a quick post here to share some notes I made when using the mongodb_consistent_backup tool.

-

A quick mongofiles demo

Here’s a few simple examples of using the mongofiles utility to use MongoDB GridFS to store, search and retrieve files.

-

Bash: Count the number of databases in a gzip compressed mysqldump

A simple bash one-liner!
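One possible one-liner, sketched here and not necessarily the post's own. It assumes the dump was taken with `--all-databases` or `--databases`; those options make mysqldump write a `CREATE DATABASE` statement for each database. `gzip -dc` is used instead of `zcat` because `zcat` on macOS expects `.Z` files. The function name is hypothetical.

```bash
#!/usr/bin/env bash
# Count databases in a gzip-compressed mysqldump by counting the
# CREATE DATABASE statements that --databases/--all-databases emit.
count_dump_databases() {
    gzip -dc "$1" | grep -c '^CREATE DATABASE'
}
```

Usage: `count_dump_databases all_databases.sql.gz`.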

-

Getting started with osquery on CentOS

I recently stumbled across osquery which allows you to query your Linux, and OS X, servers for various bits of information. It's very similar in concept to WQL for those in the Windows world.

Here's my quick getting started guide for CentOS 6.X...

First download and install the latest rpm for your distro. You might want to check osquery downloads for the latest release.

    wget https://osquery-packages.s3.amazonaws.com/centos6/osquery-1.8.2.rpm
    sudo rpm -ivh osquery-1.8.2.rpm

You'll now have the following three executables in your path

- osqueryctl - bash script to manage the daemon.

- osqueryd - the daemon.

- osqueryi - command-line client to interactively run osquery queries, view tables (namespaces) and so on.

Take a look at the example config...

cat /usr/share/osquery/osquery.example.conf

The daemon won't start without a config file, so be sure to create one first. This config file does a few things, but it will also periodically run some queries and log the results to a file. This is useful for sticking data into ELK or Splunk.

    cat << EOF > /etc/osquery/osquery.conf
    {
      "options": {
        "config_plugin": "filesystem",
        "logger_plugin": "filesystem",
        "logger_path": "/var/log/osquery",
        "pidfile": "/var/osquery/osquery.pidfile",
        "events_expiry": "3600",
        "database_path": "/var/osquery/osquery.db",
        "verbose": "true",
        "worker_threads": "2",
        "enable_monitor": "true"
      },
      // Define a schedule of queries:
      "schedule": {
        // This is a simple example query that outputs basic system information.
        "system_info": {
          // The exact query to run.
          "query": "SELECT hostname, cpu_brand, physical_memory FROM system_info;",
          // The interval in seconds to run this query, not an exact interval.
          "interval": 3600
        }
      },
      // Decorators are normal queries that append data to every query.
      "decorators": {
        "load": [
          "SELECT uuid AS host_uuid FROM system_info;",
          "SELECT user AS username FROM logged_in_users ORDER BY time DESC LIMIT 1;"
        ]
      },
      "packs": {
        "osquery-monitoring": "/usr/share/osquery/packs/osquery-monitoring.conf"

-

Remove an _id field from a mongoexport json document

Although the mongoexport tool has a --fields option, it will always include the _id field by default. You can remove this with a simple line of sed, slightly modified from this sed expression.
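A sketch of the `sed` approach, not necessarily the expression the post adapted. It assumes:

- `_id` holds an ObjectId, which mongoexport writes in extended JSON as `{"$oid":"..."}` by default;
- each document is on its own line.

It removes the `_id` pair and its trailing comma. `strip_oid_id` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Remove an ObjectId _id field (and the comma after it) from each line of
# mongoexport JSON output. Assumes the {"$oid":"<24 hex chars>"} form.
strip_oid_id() {
    sed -E 's/"_id":[[:space:]]*\{[[:space:]]*"\$oid":[[:space:]]*"[0-9a-f]{24}"[[:space:]]*\},?[[:space:]]*//'
}
```

Usage: `mongoexport --db mydb --collection users | strip_oid_id > users.json`. The database and collection names are placeholders.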

-

mmo: Getting started

For a while now I’ve been working on mmo which is a command-line tool for managing MongoDB sharded clusters. It’s about time I did a release and hopefully get some feedback.

-

RPM Query on multiple servers with Python & Fabric

I’ve been playing a bit with Fabric to make some of my system administration and deployment tasks easier. As the number of servers I manage increases, I need to get smarter at managing them. Fabric fills that gap nicely.

-

Fork of Nagios custom dashboard

I was looking for something to create custom dashboards in Nagios and came across this. However, the approach taken limited each user to a single dashboard, and the method for getting the logged-in user didn’t work in my environment. So I decided to fork it…

-

MariaDB: subquery causes table scan

I got asked today to look at some slow queries on a MariaDB 10 instance. Here are the anonymized results of the investigation I did into this and how I solved the issue…

-

emo: Launch an elasticsearch cluster

I’m getting a bit more into elasticsearch, and I’ve started a GitHub project to contain some of my work. This project will be similar to mmo: Python library for MongoDB and can be found at emo. The project will again be in Python and will basically be a bunch of methods for exploring and managing an elasticsearch cluster. There’s not much there at the moment, just a bash script to launch a test elasticsearch cluster. Here’s how you could use it…

-

Delete all but the most recent files in Bash

I’ve been reviewing a few things I do and decided I need to be a bit smarter about managing backups. I currently purge by date only, which is fine if everything is working and checked regularly. I wouldn’t want to return from a two-week holiday to find my backups had been failing, nobody had checked them, but the purge job was running happily.
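One way to keep only the N most recent files, sketched under the assumption that file names contain no newlines. It parses `ls -t` output, which is otherwise fragile. The function name and arguments are hypothetical. Used instead of a purely date-based purge, it helps ensure a run of failed backups doesn't delete every good copy.

```bash
#!/usr/bin/env bash
# Delete all but the $2 most recently modified regular files in directory $1.
# Assumes file names contain no newlines (ls output is parsed line by line).
keep_newest() {
    local dir=$1 keep=$2
    (
        cd "$dir" || exit 1
        # ls -t lists newest first; tail skips the first $keep entries.
        ls -1t | tail -n +"$((keep + 1))" | while IFS= read -r f; do
            if [[ -f $f ]]; then
                rm -- "$f"
            fi
        done
    )
}
```

Usage: `keep_newest /backups/mysql 7`.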

-

Update on pymmo and demo app

Just a quick update on my pymmo project I started over on GitHub. As I stated earlier this year, I want to get deeper into Python and will be writing tools for MongoDB (and potentially other databases).

-

Recover a single table from a mysqldump

I needed to recover the data from a single table in a mysqldump containing all the databases of an entire instance. A quick Google search yielded this result, which produced a nifty little sed one-liner…
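The widely shared one-liner for this uses a `sed` address range. The range starts at the `-- Table structure for table` comment that mysqldump writes for the wanted table and stops at the next such comment. Below it is wrapped in a hypothetical `extract_table` function; it may differ from the exact expression the post links to.

Some caveats before restoring the output:

- It ends with the next table's header comment, which is harmless.
- If the table is the last one in a database, the range can run on into the following database.
- If several databases contain a table with that name, every copy is printed.

```bash
#!/usr/bin/env bash
# Print the section of a mysqldump file belonging to one table: from its
# "-- Table structure for table `name`" comment up to the next one.
extract_table() {
    local table=$1 dump=$2
    sed -n "/^-- Table structure for table \`${table}\`/,/^-- Table structure for table/p" "$dump"
}
```

Usage: `extract_table orders full_dump.sql > orders.sql`.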

-

mmo: bash script to launch a MongoDB cluster

As I announced in my Technical Goals for 2016, I’m building tools for MongoDB with Python. My first published item is a bash script to create a MongoDB cluster. This cluster will be used to develop, and test, the tools against; it is not intended for any other use. The script lives over on my GitHub account. I develop mainly on a Mac, but this should work on all major Linux distributions.

-

Mongo Query Mistakes

After years of writing SQL, we sometimes think we know it all and treat MongoDB as “just another database”. While there are many similarities, there are a few things to watch out for. Here are a few mistakes you’ll want to avoid…

-

Technical Goals for 2016

Happy New Year!

-

Using the $lookup operator in MongoDB 3.2

I often loiter over on the MongoDB User Google Group and there was an interesting question posted the other day. The poster wanted to form a document like this from two collections (where foo is a document from another collection)…

-

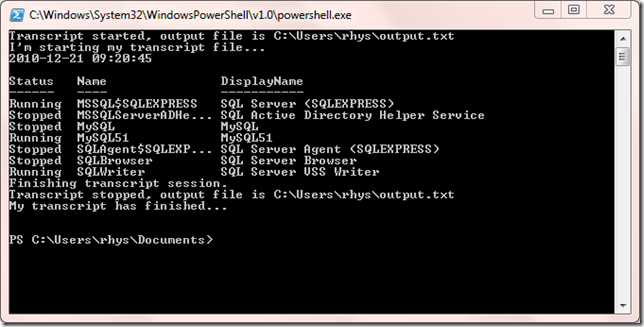

Progress bars in BASH with pv

Way back in 2009 I wrote a post about how to display progress bars in Powershell. The same thing is possible in bash with pv. If it’s not available in your shell just do…

-

Launch a MongoDB Cluster for testing

Here’s a bash script I use to create a sharded MongoDB cluster for testing purposes. The key functions are mongo_setup_cluster and mongo_teardown_cluster. The script will create a MongoDB cluster with 2 shards of 3 nodes each, 3 config servers and 3 mongos servers.

-

Partitioning setup for Linux from Scratch in VirtualBox

I’ve finally taken the plunge and committed to untarring and compiling a bucket load of source code to complete Linux From Scratch. I’ll be documenting some of my setup here. I’m far from an expert (that’s why I’m doing this), but if you have any constructive criticism I’d be glad to hear it. I’m using VirtualBox and an installation of CentOS to build LFS.

-

Highlight text using Grep without filtering text out

Here’s a neat little trick I learned today I thought was worth sharing. Sometimes I want to highlight text in a terminal screen using grep but without filtering other lines out. Here’s how you do it…
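A common way to do this, which may or may not be the exact trick in the post, is to add an alternative that matches every line. The end-of-line anchor `$` works: it produces an empty match on every line, so nothing is filtered out. Only real matches of the pattern get colored. The function name `highlight` is hypothetical.

```bash
#!/usr/bin/env bash
# Color matches of an extended regex without dropping non-matching lines:
# the "$" alternative matches the (empty) end of every line.
highlight() {
    grep --color=always -E "($1)|\$"
}
```

Usage: `tail -f app.log | highlight 'ERROR|WARN'`.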

-

MariaDB Compound Statements Outside Stored Procedures

It’s always been a small annoyance that the MySQL / MariaDB flavour of SQL wouldn’t allow you to use IF/ELSE logic or loops outside of a stored procedure or trigger. There were ways around this, but it’s not as nice if you’re coming from T-SQL. This is rectified in MariaDB from 10.1.1.

-

TokuDB file & table sizes with information_schema

Here’s a few queries using the information_schema.TokuDB_fractal_tree_info to get the on disk size in MB for TokuDB tables.

-

6 Useful Bash tips I wish I knew from day zero

Here are a few bash tricks I wish I’d been shown when I first picked up the shell. Please share any additional favorites you have.

-

The behavior of gtid_strict_mode in MariaDB

GTIDs in MariaDB are a nice improvement to replication and make fail-over a simpler process. I struggled a little with the explanation of gtid_strict_mode and what to expect. So I thought I’d run through a simple scenario to make my own understanding clear.

-

Better "read_only" slaves in MariaDB / MySQL

UPDATE: As of MySQL 5.7.8 there is super_read_only, so use that instead of this trick.

-

Table & Tablespace encryption in MariaDB 10.1.3

Here’s just a few notes detailing my investigations into table & tablespace encryption in MariaDB 10.1.3.

-

Elasticsearch: Turn off index replicas

If you’re playing with elasticsearch on a single host you may notice your cluster health is always yellow. This is probably because your indexes are set to have one replica but there’s no other node to replicate it to.

-

Grok expression for MariaDB Audit Log

Here’s a grok expression for the MariaDB Audit Plugin Log. This has only been tested against CONNECT/DISCONNECT/FAILED_CONNECT events and will likely need modification for other event types.

-

Kibana splits on hostname

If you’re playing with Kibana and you notice pie charts splitting values incorrectly, e.g. on hostnames containing hyphens, here’s the fix you need to apply. It’s actually something elasticsearch does…

-

MyISAM key cache stats with information_schema.KEY_CACHES

Following on from last week’s post on INNODB_BUFFER_PAGE queries, I thought I’d look at the equivalent for the MyISAM key cache. The information_schema.KEY_CACHES table is MariaDB-only at the moment.

-

innodb_buffer_page queries

If you want to get some high level statistics on the buffer pool in MySQL / MariaDB you can use the INNODB_BUFFER_POOL_STATS table in the information_schema database.

-

Missing InnoDB information_schema Views in MariaDB

While working on a MariaDB 10.0.14 instance today I noticed the INNODB_% tables were missing from information_schema. I could tell the InnoDB plugin was loaded.

-

Spotting missing indexes for MariaDB & MySQL

Here’s a query I use for MySQL / MariaDB to spot any columns that might need indexing. It uses a bunch of information_schema views to flag any columns that end with the characters “id” that are not indexed. This tests that the column is located at the head of the index through the ORDINAL_POSITION clause. So if it’s in an index at position 2, or higher, this won’t count.

-

Moving an InnoDB Database with FLUSH TABLES .. FOR EXPORT

If we wanted to move a large InnoDB database our options were very limited. Essentially we had to mysqldump the single database or move the entire tablespace. I did have an idea for moving a single InnoDB database by copying files but only ever tried it out with TokuDB. This method worked but seemed to frighten the developers so it’s something I never pursued beyond proof-of-concept.

-

A MariaDB Multi-Master setup example

Here’s a very quick example of how to set up Multi-Master replication with MariaDB. It’s light on detail here to focus only on the multi-master aspects of the setup. Have a good read of the documentation before attempting this. This example also uses GTIDs so you’ll need some understanding of these as well.

-

Identify Cross Database Foreign Keys

I’ve blogged before about cross-database foreign keys and what I think of them. I had a developer wanting to check for such references between two databases today. Here’s what I came up with to do this…

-

The Journey of a MacBook Pro

I don’t normally post stuff like this but I thought it was quite interesting. The journey of my new MacBook Pro is truly a reflection of the globalised world we now live in.

-

mysqldump: backing up specific tables

Here’s a quick example of how to back up specific MySQL / MariaDB tables and pipe the output to xz for compression…

-

MariaDB's federatedx engine

I’ve been experimenting a little with the federatedx engine in MariaDB. For those of you coming from MSSQL think linked servers and that’s pretty much it, albeit with a few differences. Here’s a quick primer on the basics.

-

UNKNOWN STORAGE ENGINE 'FEDERATED'

You may receive the following error with MariaDB on a Windows platform when attempting to create a table using the federatedx engine.

-

Bash script to execute a MariaDB query multiple times

This simple bash script will execute a query 100 times against a MySQL instance. It also uses the time command to report how long the entire process took. I use this for some very simple benchmarking.
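A minimal sketch of the idea, not the original script. It assumes the mysql client can log in without prompting (for example via ~/.my.cnf). The function name run_query_n_times, the MYSQL_CMD override, and the database and query in the usage line are hypothetical placeholders.

```shell
# Hypothetical helper: run one query N times through the mysql client.
# Assumes credentials come from ~/.my.cnf so no password prompt appears.
# MYSQL_CMD may point at another client binary; it defaults to "mysql".
run_query_n_times() {
    local count="$1" database="$2" query="$3" i=1
    while [ "$i" -le "$count" ]; do
        # -N skips column headers, -B gives batch output; the results are
        # discarded because only the elapsed time matters here.
        "${MYSQL_CMD:-mysql}" -N -B -e "$query" "$database" > /dev/null || return 1
        i=$((i + 1))
    done
}

# Usage: let bash's time keyword report the total for 100 runs.
# time run_query_n_times 100 test "SELECT COUNT(*) FROM t1;"
```

The loop stops at the first failing execution, so a typo in the query doesn't produce 100 identical errors.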

-

TSQL: Restore a multiple file backup

Just a follow up post using the backup files created in TSQL: Backup a database to multiple files. Here’s the script I used…

-

Copy date stamped backups with a regex & scp

Let’s assume you have a directory of date-stamped backups you want to scp to another location…
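One way to do the selection, sketched under an assumed naming scheme of backup_YYYYMMDD.sql.gz. The function name, directory, remote host and path are all hypothetical. A regex pulls the date out of each file name, and only backups on or after a given date are printed for scp to copy.

```shell
# Hypothetical naming scheme: backup_YYYYMMDD.sql.gz. Prints the backups in
# directory $1 whose embedded date is on or after $2 (YYYYMMDD).
select_backups_since() {
    local dir="$1" since="$2" f name stamp
    for f in "$dir"/backup_*.sql.gz; do
        [ -e "$f" ] || continue    # the glob matched nothing
        name=$(basename "$f")
        # Extract the 8-digit date with a regex; non-matching names are skipped.
        stamp=$(printf '%s\n' "$name" | sed -n 's/^backup_\([0-9]\{8\}\)\.sql\.gz$/\1/p')
        [ -n "$stamp" ] || continue
        if [ "$stamp" -ge "$since" ]; then
            printf '%s\n' "$f"
        fi
    done
}

# Usage: copy the selected files (host and path are placeholders).
# < /dev/null keeps scp from reading the file list that feeds the loop.
# select_backups_since /var/backups 20140501 | while IFS= read -r f; do
#     scp "$f" backupuser@remotehost:/srv/backups/ < /dev/null
# done
```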

-

TSQL: Backup a database to multiple files

I wanted to see how much I could reduce backup times by specifying multiple files in the BACKUP TSQL command. Here’s a script I wrote to do this and I present a summary of the results below. The times are based on a database that produced a backup file(s) of approximately 51GB. Your mileage will vary here based on a whole bunch of factors. Therefore consider these results illustrative and do your own testing.

-

Check a html page with check_http

With the check_http Nagios plugin we can check that a URL returns an OK status code as well as verify that the page contains a certain string of text. The usage format is as follows…

-

Modifying elasticsearch index settings

To view the settings of an index run the following at the command-line…

-

Removing logstash indices from elasticsearch

I’ve been playing with EFK and elasticsearch ended up eating all of the RAM on my test system. I discovered this was due to it attempting to cache all these indexes. Since this is a test system I’m not too bothered about having a long history here, so I wrote this bash script to remove logstash indexes from elasticsearch, compress and archive them. This reduces the memory pressure and leaves a better-behaved system. Explanatory comments are included.
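A minimal sketch of the deletion half only; the compress-and-archive step from the post isn't shown. It assumes Elasticsearch listens on localhost:9200 (the hypothetical ES_URL variable overrides that) and that indices follow the daily logstash-YYYY.MM.DD naming. It also assumes GNU date for the "N days ago" syntax. The function names are illustrative.

```shell
# Assumptions: daily logstash-YYYY.MM.DD indices, GNU date, and
# Elasticsearch at ES_URL (defaults to http://localhost:9200).
ES_URL="${ES_URL:-http://localhost:9200}"

# Print the name of the daily logstash index for the day N days ago.
logstash_index_for() {
    echo "logstash-$(date -d "$1 days ago" +%Y.%m.%d)"
}

# Delete the daily indices from $1 days ago back to $2 days ago (inclusive).
delete_old_logstash_indices() {
    local from="$1" to="$2" day index
    day="$from"
    while [ "$day" -le "$to" ]; do
        index=$(logstash_index_for "$day")
        # An HTTP DELETE on /<index> removes that index from the cluster.
        curl -s -XDELETE "$ES_URL/$index" > /dev/null
        day=$((day + 1))
    done
}

# Usage: drop the indices from 30 to 60 days old.
# delete_old_logstash_indices 30 60
```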

-

TSQL: Estimated database restore completion

Here’s a query providing the approximate percentage completed, and estimated finish time, of any database restores happening on a SQL Server instance…

-

Monitoring fluentd with Nagios

Here’s just a few Nagios command strings you can use to monitor fluentd. I’ve thrown in a check for elasticsearch in case you’re monitoring an EFK system.

-

TSQL: Database Mirroring with Certificates

Here’s some more TSQL for the 70-462 exam. The script shows the actions needed to configure database mirroring using certificates for authentication. Explanatory notes are included but you’re likely to need the training materials for this to make sense. TSQL is not included for the backup/restore parts needed for database mirroring.

-

TSQL: Enable & Disable Logins and DENY connect

More notes for the 70-462 exam. This time we’re showing examples from ALTER LOGIN to enable and disable logins, as well as denying and granting the connect permission.

-

TSQL: When were databases last restored

Here’s a couple of queries I’ve modified from When was the last time your SQL Server database was restored?

-

TSQL: Database Permission Exercise for 70-462

Here’s the TSQL for an exercise, involving database permissions, from the 70-462 training materials. Explanatory comments are included.

-

TSQL: Create SQL Logins using certificates and asymmetric keys 70-462

Here’s some TSQL for creating SQL logins using certificates and asymmetric keys. Explanatory comments are included.

-

TSQL: Partially Contained Databases 70-462

Here’s some TSQL for the Partially Contained Databases section of the 70-462. Explanatory comments are included.

-

EFK: Free Alternative to Splunk Using Fluentd

Here is an updated version of the instructions given at Free Alternative to Splunk Using Fluentd. The installation was performed on CentOS 6.5.

1. Install ElasticSearch

-

TSQL: User-Defined Server Roles 70-462

Just a little TSQL for the User-Defined Server Roles exercise in the 70-462 training materials. Explanatory comments are included.

-

Parsing Nagios log files with fluentd

Recently I’ve been experimenting with EFK to see how we can extract value from our machine logs. We also use Nagios to monitor various services and processes within our infrastructure. The text logs produced by Nagios are not very useful in their raw form as you can see…

-

Server Roles with TSQL

Here’s a few bits of TSQL you can use when working with Server-Level Roles in SQL Server 2012.

-

TSQL: Transparent Data Encryption exercise for 70-462

Here’s some TSQL for the WingTipToys2012 Transparent Data Encryption (TDE) exercise in the 70-462 training materials.

-

TSQL: Partitioned table exercise for 70-462

Here’s some TSQL for the WingTipToys2012 table partitioning exercise in the 70-462 training materials.

-

Installing Fluentd Using Ruby Gem

Here’s just a little update of the process found here, Installing Fluentd using Ruby Gem on OpenSuSE 12.1

-

Installing Analysis Service & Reporting Services from the command-line

Here’s just a few examples of installing Analysis Services and Reporting Services from the command-line.

-

Activating Windows 2008 R2 Server Core

From the command-line simply run…

-

TSQL: Restore a database without a log (ldf) file

I had to restore a bunch of databases missing a log file today. This was only a test server but I wanted to get it running as quickly as possible.

-

TSQL: Accuracy of DATETIME

Here’s something I didn’t know about the DATETIME data type in SQL Server….

-

TSQL: Table count per filegroup

Here’s a query that uses the SQL Server System Catalog Views to return a table count per filegroup. I used this to check even table distribution in a data warehouse.

-

CentOS: clvmd startup timed out

I received the following error, on CentOS 6.5, when configuring a High Availability cluster. This also caused the computer to freeze during OS boot.

-

The database cannot be recovered because the log was not restored

A test restore of a SQL Server database had somehow been left in the “RESTORING” state. I attempted to bring the database online with

-

Adding iSCSI volumes to the nodes

This post is part of a series that will deal with setting up a MySQL shared storage cluster using VirtualBox & FreeNAS. In this post we deal with the setup of an iSCSI volume on two nodes. The text in bold below represents commands to be executed in the shell.

-

Linux Cluster Node Configuration

Linux Node1

-

FreeNAS Configuration

This post is part of a series that will deal with setting up a MySQL shared storage cluster using VirtualBox & FreeNAS. This post deals with the configuration of FreeNAS.

-

Planning the cluster configuration

This post is part of a series that will deal with setting up a MySQL shared storage cluster using VirtualBox & FreeNAS. In this post we specify some brief details of the cluster configuration. Please note this post will be updated in the near future.

-

Aria Storage Engine Primer

I’m looking into HA MySQL at the moment. With these types of technologies you need to have a crash-safe storage engine in use. MyISAM just won’t cut it. While my long-term goal is to move to a fully-transactional storage engine, for example InnoDB, I’m looking at other possibilities.

-

Creating & Installing the CentOS cluster nodes.

This post is part of a series that will deal with setting up a MySQL shared storage cluster using VirtualBox & FreeNAS. In this post we deal with the installation of CentOS in VirtualBox.

-

Installing & Configuring a MySQL shared-storage Cluster

This post is meant as an index of posts dealing with the installation of a shared-storage MySQL cluster running within VirtualBox. I’m learning this stuff too so don’t assume this is the reference implementation. Feel free to point out any issues, or provide recommendations, and I’ll update the post and give you credit.

-

Installing FreeNAS in VirtualBox on OpenSuSE

This post is part of a series that will deal with setting up a MySQL shared storage cluster using VirtualBox & FreeNAS. In this post we deal with the installation of VirtualBox & FreeNAS.

-

Replace the Engine used in mysqldump

Just a little bash snippet to replace the ENGINE type used in a mysqldump. Slightly modified from this stackoverflow thread to perform the dump and replacement in a single step.
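A sketch of the dump-and-replace-in-one-step idea using sed. This isn't the exact snippet from the post or the StackOverflow thread. The function name replace_engine and the database and file names are hypothetical, and engine names are assumed to be plain identifiers with no sed special characters.

```shell
# Hypothetical helper: rewrite ENGINE=<old> as ENGINE=<new> in SQL read on stdin.
# Engine names are assumed to be plain identifiers (no sed special characters).
replace_engine() {
    sed -e "s/ENGINE=$1/ENGINE=$2/g"
}

# Usage: dump and convert in one step (database and file names are placeholders).
# mysqldump mydb | replace_engine MyISAM InnoDB > mydb_innodb.sql
```

sed works on plain text, so it would also rewrite ENGINE=MyISAM if that string appeared inside row data. Check the output before loading it.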

-

What permissions have your users really got?

Here’s a TSQL script to audit the access permissions that certain AD users have on a SQL Server instance. This script uses the EXECUTE AS LOGIN clause and the system function sys.fn_my_permissions. All databases on the SQL Server instance are queried and the script will output results containing the assigned user permissions. To get started all you need to do is change the INSERT into #users to contain the users you want to audit.

Check out this post if you want to audit users in a particular AD group. It might save you a little more time.

-

Audit database user & role mappings in SQL Server

This script provides you with a list of the database user and database role mappings for an entire SQL Server instance. The following system views are used;

-

Check the value of an Environmental Variable on Multiple servers

Here's one Powershell method for checking the value of an environment variable on multiple servers;

$computers = @("server1", "server2", "server3");

-

Correct a log file with too many VLFs

The what and why of this post is explained in Transaction Log VLFs – too many or too few? Presented here is a quick practical example of how you might correct this issue in a database log file.

-

A quick example of why IN can be bad

Here’s just a quick demo that illustrates why the IN operator in TSQL might not perform as well as alternatives (like a range query or joining onto a temporary table containing your values).

-

Generate PK drops and creates using TSQL

Here’s just a couple of queries I used to generate PK drops and creates using the sys.key_constraints view. I wanted to do this for a database using Poor Man's Partitioning.

-

What's protected from accidental deletion in Active Directory?

Here's just a little tip I picked up from a presentation by Mark Broadbent (retracement on twitter). I'm the guy who wasn't listening! Mark stressed the importance of enabling the Protection from accidental deletion property in Active Directory for your Failover Clusters. Here's how to check this for Computers with Powershell.

This string of commands requires the AD Module. So if you're not using Powershell 3.0 you need to ensure this is loaded.

I've included a further filter in the Where-Object cmdlet because I'm only interested in SQL Servers. Remove or adjust this if needed.

Get-ADObject -Filter {ObjectClass -eq "Computer"} -Properties Name, ProtectedFromAccidentalDeletion | Where-Object {$_.Name -match "SQL"; } | Select Name, ProtectedFromAccidentalDeletion | Format-Table -Autosize;

The output will look something like below...

Name       ProtectedFromAccidentalDeletion
----       -------------------------------
SQLSERVER1 False
SQLSERVER2 False
SQLSERVER3 False

-

Move a MySQL / TokuDB database?

I’ve been having a look at TokuDB recently and I’m quite excited about some of its claims. But everything comes with its limitations! If you search Google for “move tokudb database” you’ll be presented with a big page of NO! Aside from moving the entire data directory the advice here is to use mysqldump or change to another storage engine, i.e. MyISAM, before moving the database files.

-

MySQL Database Maintenance Stored Procedure Update

This is just a quick update of a stored procedure to assist with MySQL Database Maintenance. I originally posted this back in 2012.

-

TokuDB live query reports

I’ve just started to have a peek at TokuDB for MySQL today. I’m running a few statements to turn some existing MyISAM tables over to the TokuDB storage engine. Here’s what you see with a SHOW PROCESSLIST command.

-

MySQL Partitioning & OPTIMIZE TABLE

MySQL table Partitioning can be used in various ways to improve performance. I wanted to get some idea of how this would affect database maintenance operations like OPTIMIZE TABLE.

-

Failover Cluster Report post on Scripting Guys

I’ve got another post on the Scripting Guys Blog. This post uses powershell to report on a Windows Failover Cluster. I think it’s pretty cool, let me know what you think!

-

Matching windows cluster node ID to physical server

Here's the Powershell version of matching the node number in Cluster.Log files to the actual cluster node names. Essentially log messages like this one...

ERROR_CLUSTER_GROUP_MOVING(5908)' because of ''Cluster Disk' is owned by node 1, not 2.'

Inspired by this post using cluster.exe.

Get-ClusterNode -Cluster ClusterName | SELECT Name, Id, State | Format-Table -Autosize;

Output will look something like below...

Name         Id                                   State
----         --                                   -----
clusternode1 00000000-0000-0000-0000-000000000001 Up
clusternode2 00000000-0000-0000-0000-000000000002 Up

-

What does disk maintenance mode actually do to a Failover Cluster?

In Failover Cluster Manager if you perform the following sequence of actions…

-

[Warning] User entry 'username'@'hostname' has an empty plugin value. The user will be ignored and no one can login with this user anymore.

After upgrading an instance of MySQL to 5.7 I was unable to login and had several of the following entries in the error log.

-

ERROR Failed to open the relay log '7' (relay_log_pos 0)

I received the following error when I modified a slave from MySQL 5.6.14 to MariaDB 5.5.33 and executed “START SLAVE;”.

-

List AD Groups Setup on SQL Server

Here's a quick powershell snippet to display the Windows groups set up as logins on SQL Server.

Import-Module SQLPS -DisableNameChecking -ErrorAction Ignore; Import-Module ActiveDirectory -DisableNameChecking -ErrorAction Ignore; $sql_server = "sql_instance"; $srv = New-Object Microsoft.SqlServer.Management.Smo.Server $sql_server; $srv.Logins | Where-Object {$_.LoginType -eq "WindowsGroup";};

Output will be similar to below.

Name                      Login Type   Created
----                      ----------   -------
NT SERVICE\ClusSvc        WindowsGroup 13/10/2013 11:17
NT SERVICE\MSSQLSERVER    WindowsGroup 13/10/2013 12:17
NT SERVICE\SQLSERVERAGENT WindowsGroup 13/10/2013 12:17
Domain\Group 1            WindowsGroup 04/06/2013 12:29
Domain\Group 2            WindowsGroup 02/04/2013 11:25
Domain\Group 3            WindowsGroup 02/04/2013 12:22

-

Native table 'performance_schema'.'threads' has the wrong structure

After upgrading a MySQL slave from 5.5 to 5.6.14 I attempted to execute the following query…

-

SQL Server Partitioning for Paupers

Last year I posted about a pauper’s partitioning technique I used with SQL Server to solve some data purging issues. In a similar vein I recently found SQL Server partitioning without Enterprise Edition that looks like the answer to an issue in our systems. We have large amounts of data but simply can’t justify the cost of SQL Server Enterprise. All is not lost. Time to roll up our TSQL sleeves!

-

Getting started with Query Notifications with SQL Server 2008 R2

I’ve been experimenting with Query Notifications in SQL Server 2008 R2. This looks like a really cool way of implementing real-time features into your applications without constantly battering your database with select statements. Instead we can request a notification for when the data has changed for a query. Here’s a quick demo of the feature.

-

Inspect those indexes

Here’s a few queries I often use to review the indexes in our SQL Server systems.

-

Who's in those AD Windows Groups setup on SQL Server?

I wanted to be able to check which windows users had been placed in the Windows AD Groups we use to control access to SQL Server. Here’s what I came up with to make checking this easy;

-

SSIS: Failed to load XML from package file

I recently came across this error in one of our SSIS packages.

-

Tsql Mirroring Failover

-

Use sys.sql_modules not INFORMATION_SCHEMA.ROUTINES

Have you ever tried using INFORMATION_SCHEMA.ROUTINES in SQL Server for searching for all procs referencing a specific object? Perhaps something like this;

-

Twitter lists with Tweet-SQL 3.6

Here’s a quick post about viewing Twitter lists with Tweet-SQL 3.6. First you can view your lists by running;

-

The job failed. Unable to determine if the owner (sa) of job has server access.

If you have failing SQL Agent jobs with the following error;

-

Moving user databases the TSQL way

Here’s a few queries I built to construct the commands needed to move user database files in SQL Server 2008 R2. The queries are based on the procedure outlined here. As with all scripts on the Internet take care with this. It worked fine for my circumstances but may not in yours. Be careful and take backups!

-

Find out members of a database role in SQL Server

Just a quick post with a query to identify members of a specific database role in MSSQL.

-

Powershell: Who is in an Active Directory Group?

This Powershell snippet uses the Get-ADGroupMember cmdlet to retrieve the names of users in a specific AD group.

Import-Module ActiveDirectory; Get-ADGroupMember -Identity "Group Name" | Select-Object Name | Format-Table -AutoSize;

Output should look something like below;

Name
----
Joe Bloggs
John Smith
Jane Doe

-

Mirroring SQL Server 2008 R2 Enterprise to Standard

If you attempt to mirror SQL Server 2008 R2 Enterprise to Standard edition, using SSMS, you will receive the following error message;

-

Slave: Got error -1 from storage engine Error_code: 1030

I received this error after copying a MyISAM database from one server to another.

-

Copy SSIS Packages between SQL Server Instances

I’m in the process of setting up a mirrored server and I’m looking to make fail-over as painless as possible.

-

Archiving a Twitter user's timeline with Tweet-SQL 3.6

Here’s a quick update of a post I made way back in 2008 to archive a user's timeline. This script will allow you to download the tweets from any unprotected twitter account. Let’s get started!

-

Tweet-SQL 3.6 Released!

The long overdue update for Tweet-SQL is here! This is a major update for version 1.1 of the Twitter API.

-

Using Hints in SQL Server

Query hints are bad right? I confess to using them on odd occasions but only when other attempts to find a solution have failed. Microsoft emphasize this themselves;

-

DBT2: ImportError: libR.so: cannot open shared object file

Yet another error I encountered whilst running DBT2 tests. This time it was a problem with generating the final reports.

-

DBT2: stmt ERROR: 1406 Data too long for column 'out_w_city' at row 1

Another issue with the DBT2 benchmarking suite.

-

Get a list of all your database files

I needed a list of all the database files on a SQL Server instance. Here’s how to get this easily.

-

Troubleshooting the sh mysql_load_db.sh script for dbt2

I’ve been working with the dbt2 benchmarking tool recently and had a few issues I thought I’d detail here for anyone else having the same issues. I was attempting to load the dbt2 database with test data;

-

Installing the DBT2 Benchmark Tool on Linux

Just recording the process I used to install the DBT2 benchmarking tool. I used OpenSuSE 12.1 for this but it should work on many distributions.

-

Functions & sargable queries

Using functions improperly in your where clauses always prevents index usage right? I’ve been reviewing some queries generated by linq and I’ve found out this isn’t always the case. A quick demo…

-

[ERROR] Native table 'performance_schema'.'table name' has the wrong structure

After I upgraded an instance to MySQL 5.7 I noted the following errors in the log;

-

Are you checking for possible integer overflow?

I realized I wasn’t! We run a couple of systems that I know stick a mass of records through on a daily basis. Better start doing this then or I might end up doing a whoopsie!

-

Compare AD Group Memberships with Powershell

Here’s a quick Powershell script I knocked up to help me check AD Group Memberships between two user accounts. Just set the $user1 and $user2 variables and you’re good to go.

-

Failed to configure Node and Disk Majority quorum with '[Disk Group Name]'.

I was changing the drive used as a disk quorum today and received the following error at the end of the wizard in Failover Cluster Manager;

-

Powershell to get Windows Startup & Shutdown times

Here's a quick Powershell snippet to get the startup and shutdown times for a windows system after a specific point.

Get-EventLog -LogName System -ComputerName myHost -After 12/03/2013 -Source "Microsoft-Windows-Kernel-General" | Where-Object { $_.EventId -eq 12 -or $_.EventId -eq 13; } | Select-Object EventId, TimeGenerated, UserName, Source | Sort-Object TimeGenerated | Format-Table -Autosize;

Id 12 indicates a startup event while 13 indicates a shutdown event.

EventID TimeGenerated       UserName            Source
------- -------------       --------            ------
13      12/03/2013 07:41:58                     Microsoft-Windows-Kernel-General
12      12/03/2013 07:44:06 NT AUTHORITY\SYSTEM Microsoft-Windows-Kernel-General

-

Migrate users between MySQL Servers with pt-show-grants

If you use MySQL but don’t use Percona Toolkit you’re really missing a trick. It contains a whole host of useful tools including pt-show-grants which I use to migrate users between servers easily.

-

Compiling Hadoop example MaxTemperature.java

I’m working through some of the examples in this Hadoop book. I’m a little rusty on compiling java programs and had a little trouble with this one so I’m documenting it here for anyone else who might be having issues.

-

Hadoop VersionInfo Issue on OpenSuSE 12

I was getting the following error when attempting to run hadoop version.

-

Preparing the NCDC Weather Data for Hadoop

I’m exploring Hadoop with the book Hadoop: The Definitive Guide. Appendix A shows how to download NCDC Weather data from S3 and put it into Hadoop. I didn’t want to download from S3 or load the entire dataset so here’s what I did instead.

-

Getting started with Hadoop

I wanted to get started playing about with Hadoop but had trouble installing Cloudera’s CDH. As I only wanted to have a working version of Hadoop for development purposes I decided to skip using Cloudera’s distribution and go direct to the Apache Hadoop release. Here’s the process I went through to set it up on OpenSuSE 12.1.

-

Tech plans for 2013

Just a quick post on my technical plans for 2013…

-

MySQL Cursor bug?

I came across this little funny with MySQL cursors today. This may be documented somewhere in the manual but I couldn’t find it. Thought I’d post it here for anyone else experiencing cursor issues with MySQL. First, a quick illustration of the issue…

-

Monitor /tmp usage on Linux

Just a quick post to show how to monitor usage of /tmp on a Linux system. Set up a cron job as follows…
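A minimal sketch of the kind of check a cron job could run. It is not necessarily the command the post uses. It reads the Use% column from `df -P` and warns via syslog above a threshold. The THRESHOLD variable (default 80), the tmp-monitor tag, the function names and the script path are illustrative assumptions.

```shell
# Print the percentage of the filesystem holding $1 (default /tmp) in use.
tmp_usage_percent() {
    # df -P keeps each filesystem on one line; field 5 of line 2 is "Use%".
    df -P "${1:-/tmp}" | awk 'NR == 2 { sub(/%/, "", $5); print $5 }'
}

# Log a warning to syslog when /tmp usage reaches THRESHOLD percent.
check_tmp_usage() {
    local used
    used=$(tmp_usage_percent /tmp)
    if [ "$used" -ge "${THRESHOLD:-80}" ]; then
        logger -t tmp-monitor -p user.warning "/tmp is ${used}% full"
    fi
}

# Example crontab entry (every 15 minutes), assuming the functions live in a
# hypothetical /usr/local/bin/check_tmp.sh that ends by calling check_tmp_usage:
# */15 * * * * /usr/local/bin/check_tmp.sh
```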

-

Table-Valued Parameters need an alias!

I’m always the one to say RTFM but this one stumped me for a while. I had problems using a Table-Valued Parameter in a Stored Procedure today.

-

Linux Tip: Output error messages to syslog from cron

I wanted to find a way of running a script in cron and output the exit code, and error message, to syslog if it failed. Here’s what I came up with…
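One possible shape for this, offered as a sketch rather than the post's exact approach. It runs the command, captures its stderr, and on a non-zero exit sends the exit code and error text to syslog with logger. The function name run_logged, the tag, the priority and the paths in the crontab line are hypothetical.

```shell
# Run a command; if it fails, send its exit code and stderr to syslog.
# Usage: run_logged <syslog-tag> <command> [args...]
run_logged() {
    local tag="$1" errfile rc
    shift
    errfile=$(mktemp) || return 1
    "$@" 2> "$errfile"
    rc=$?
    if [ "$rc" -ne 0 ]; then
        # tr flattens multi-line error output into a single syslog message.
        logger -t "$tag" -p user.err "exit code $rc: $(tr '\n' ' ' < "$errfile")"
    fi
    rm -f "$errfile"
    return "$rc"
}

# Example crontab entry (paths are placeholders):
# 0 2 * * * . /usr/local/lib/run_logged.sh && run_logged nightly-job /usr/local/bin/nightly.sh
```

The original exit code is returned, so cron (or a wrapper) still sees the failure.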

-

Linux Tip: Add datetime stamp to bash history

I like to know what’s happening on my Linux servers. The output of the history command doesn’t include a datetime stamp by default. To rectify this open the global profile….
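One common way to get timestamps is bash's HISTTIMEFORMAT variable. This is not necessarily the exact line the post adds. It takes a strftime-style format that history uses to print each entry's time. The format below is an illustrative choice, and putting it in /etc/profile or a file under /etc/profile.d/ is an assumption about where the global profile lives.

```shell
# Added to the global profile (e.g. /etc/profile or /etc/profile.d/history.sh).
# %F is YYYY-MM-DD and %T is HH:MM:SS; the trailing space separates the
# timestamp from the command in `history` output.
export HISTTIMEFORMAT="%F %T "
```

With HISTTIMEFORMAT set, bash also writes each entry's timestamp to the history file as a comment line, so the times survive across sessions.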

-

Monitoring Windows with NSClient++

I’ve been playing with Nagios recently and have been using NSClient++ to monitor Windows machines. In some places the documentation wasn’t too great so I thought I’d outline some service checks I’ve got working here. The service definitions here would normally be defined on the Nagios host not on the Windows box itself.

-

Nagios timeperiod for Bank Holidays

The Nagios configuration files come with a timeperiod example for US Public holidays but not for good old Blighty! Obviously that won’t do so here’s one for England & Wales (sorry Scotland!).

-

MySQL Group By

Many people are caught out by MySQL’s implementation of GROUP BY. By default MySQL does not require that you GROUP BY all non-aggregated columns. For example the following is an illegal query in SQL Server (as well as rather nonsensical);

-

pmp-check-mysql-deleted-files plugin issue

I’ve been busy setting up the Percona Nagios MySQL Plugins but ran into an issue with the pmp-check-mysql-deleted-files plugin;

-

Quick Linux Tip: rpm query & xclip

I’m working on a script to do some basic auditing of my Linux servers. One thing I want to record is the install details from an rpm query. The following command will provide us with some basic details of the rpms installed and ordered by date.

-

Check the SQL Server Service Account Can Write the SPN

I don’t have access, like many DBAs, to the inner bowels of Active Directory. While I’m more than happy for it to stay this way I still want to check that certain things have been setup correctly and haven’t been “cleaned-up” by a security focused domain administrator.

-

MySQL Database Maintenance Stored Procedure

UPDATED VERSION: MySQL Database Maintenance Stored Procedure

-

Purging data & Partitioning for Paupers

Several months ago at work we started having some terrible problems with some jobs that purge old data from our system. These jobs were put into place before my time, and while fine at the time, were now causing us some big problems. Purging data would take hours and cause horrendous blocking while they were going on.

-

Check Mirroring Status with Powershell

Here's a simple Powershell snippet to check the mirroring status on your SQL Server instances.

# Load SMO extension
[System.Reflection.Assembly]::LoadWithPartialName("Microsoft.SqlServer.Smo") | Out-Null;

-

Get-ServerErrors Powershell Function

Here’s a little Powershell function I’m using to check the Event Logs and SQL Server Error Logs in one fell swoop;

-

Working with multiple computers in Bash

Working with multiple computers in Powershell is absurdly easy using the Get-Content cmdlet to read computer names from a text file. It’s as easy as this…
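A minimal sketch of a bash equivalent of reading computer names with Get-Content. It is not necessarily what the post goes on to show. The file name servers.txt and the function name read_hosts are hypothetical. Blank lines and # comments in the file are skipped.

```shell
# Print host names from a text file, one per line, skipping blank lines
# and lines starting with # (leading whitespace allowed).
read_hosts() {
    grep -v -e '^[[:space:]]*#' -e '^[[:space:]]*$' "$1"
}

# Usage: run a command on each host over ssh. ssh -n stops ssh from reading
# stdin, which would otherwise swallow the rest of the host list.
# read_hosts servers.txt | while IFS= read -r host; do
#     ssh -n "$host" uptime
# done
```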

-

List AD Organizational Units with Powershell

Here's a quick Powershell one-liner to list all the Organizational Units, or OUs, in your Active Directory domain. Firstly you'll probably need to load the ActiveDirectory module. This can be done at the Powershell prompt with the below command;

Import-Module ActiveDirectory;

Then we can use the Get-ADOrganizationalUnit cmdlet to retrieve a list of OUs.

Get-ADOrganizationalUnit -Filter * | Select-Object -Property Name | Format-Table -AutoSize;

This will display a list looking something like below;

Name ---- Domain Controllers Microsoft Exchange Security Groups Security Groups Domain Servers Domain Workstations Domain Guest Accounts Printers Management Virtual Desktops IT Service Accounts Users Computers Production Servers SQL Servers Web Servers

-

Beware the Powershell -Contains operator

Many of us tend to jump quickly into a new programming or scripting language, applying knowledge we’ve learned elsewhere to the current task at hand. Broadly speaking this works well but there's always a little gotcha to trip you up!

-

Monitoring SSRS Subscriptions with Powershell

We don’t use SSRS much at my workplace but its usage is slowly creeping up. I realised that none of us are keeping an eye on the few subscriptions we have set-up. So I decided to do something about that.

-

Using Powershell to increment the computers date

Here’s a little snippet of Powershell code I used recently to test some TSQL that runs according to a two week schedule.

-

TSQL to generate date lookup table data

I needed to generate a range of data about dates for a lookup table. There’s an elegant solution using a recursive cte that does the job;

-

MySQL Storage engine benchmarking

Here’s a stored procedure I use to perform some simple benchmarking of inserts for MySQL. It takes three parameters: p_table_type, which should be set to the storage engine you wish to benchmark, e.g. ‘MyISAM’ or ‘InnoDB’; p_inserts, the number of inserts to perform; and p_autocommit, the value (0 or 1) of the autocommit variable, which is relevant to InnoDB only.

-

Testing a Failover Cluster with Powershell

Just a quick Powershell snippet that I'm going to use to run validation tests on one of my staging Failover Clusters during OOH.

The script below will take some services offline, run the validation tests, and then bring the appropriate cluster groups back online. The report will be saved using the date as the name. To use this you will need to set $cluster appropriately and perhaps customize the cluster groups that are brought offline & online.

Import-Module FailoverClusters;

-

Domain user password expiry with Powershell

I needed to figure out a method for producing alerts when a domain account is approaching the password reset date. Here it is in a few lines of Powershell…

-

The difference statistics can make

A few days ago a developer came to me with a query that was executing slowly on a staging server. On this server it took 16 long seconds to execute while on other servers it took about 1 second.

-

Checking Disk alignment with Powershell

Disk alignment has been well discussed on the web and the methods to check this always seem to use wmic or DISKPART. I've always loathed wmi so here's a few lines of Powershell that achieves the same thing;

$sqlserver = "sqlinstance";
# Get disk partitions
$partitions = Get-WmiObject -ComputerName $sqlserver -Class Win32_DiskPartition;
$partitions | Select-Object -Property DeviceId, Name, Description, BootPartition, PrimaryPartition, Index, Size, BlockSize, StartingOffset | Format-Table -AutoSize;

This will display something like the following:

DeviceId              Name                  Description             BootPartition PrimaryPartition Index          Size BlockSize StartingOffset
--------              ----                  -----------             ------------- ---------------- -----          ---- --------- --------------
Disk #2, Partition #0 Disk #2, Partition #0 Installable File System False         True                 0 1099523162112       512        1048576
Disk #3, Partition #0 Disk #3, Partition #0 Installable File System False         True                 0  536878252032       512        1048576
Disk #4, Partition #0 Disk #4, Partition #0 Installable File System False         True                 0    1082130432       512          65536
Disk #5, Partition #0 Disk #5, Partition #0 Installable File System False         True                 0    1082130432       512          65536
Disk #1, Partition #0 Disk #1, Partition #0 Installable File System False         True                 0  107376279552       512        1048576
Disk #0, Partition #0 Disk #0, Partition #0 Installable File System True          True                 0     104857600       512        1048576
Disk #0, Partition #1 Disk #0, Partition #1 Installable File System False         True                 1   81684070400       512      105906176
Disk #0, Partition #2 Disk #0, Partition #2 Installable File System False         True                 2  104857600000       512    81789976576
Disk #0, Partition #3 Disk #0, Partition #3 Installable File System False         True                 3  104857600000       512   186647576576
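To read the output, note that a partition is aligned when its StartingOffset divides evenly by the alignment unit. The Python sketch below applies that check to the offsets shown above. The 64 KB default is an assumption for illustration only, so substitute your storage's stripe unit size. For comparison, 32,256 bytes (63 sectors of 512 bytes), the old pre-Windows Server 2008 default starting offset, fails the check.

```python
# Partition starting offsets (bytes) copied from the Win32_DiskPartition output above.
PARTITION_OFFSETS = {
    "Disk #2, Partition #0": 1048576,
    "Disk #3, Partition #0": 1048576,
    "Disk #4, Partition #0": 65536,
    "Disk #5, Partition #0": 65536,
    "Disk #1, Partition #0": 1048576,
    "Disk #0, Partition #0": 1048576,
    "Disk #0, Partition #1": 105906176,
    "Disk #0, Partition #2": 81789976576,
    "Disk #0, Partition #3": 186647576576,
}


def is_aligned(starting_offset: int, unit: int = 64 * 1024) -> bool:
    """A partition is aligned when its starting offset is a whole multiple of the unit."""
    return starting_offset % unit == 0


def misaligned(partitions: dict[str, int], unit: int = 64 * 1024) -> list[str]:
    """Names of partitions whose starting offset is not a multiple of the unit."""
    return [name for name, offset in partitions.items() if not is_aligned(offset, unit)]


if __name__ == "__main__":
    print(misaligned(PARTITION_OFFSETS))               # every partition passes at 64 KB
    print(misaligned(PARTITION_OFFSETS, 1024 * 1024))  # the two 64 KB-offset disks fail at 1 MB
    print(is_aligned(32256))                           # the old 63-sector default fails
```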

-

Audit VLFs on your SQL Server

I’ve been reading a bit about VLFs (Virtual Log Files) this week. I’ve found quite a few interesting links, especially this one, informing us that there’s such a thing as too few or too many VLFs.

[System.Reflection.Assembly]::LoadWithPartialName("Microsoft.SqlServer.Smo") | Out-Null;

-

Tweet-SQL 3.5 Released

Tweet-SQL 3.5 is now available. This release features better methods for using multiple Twitter accounts and adds HTTPS support. Download your copy over on tweet-sql.com.

-

Fun with the Get-Hotfix cmdlet

With the Get-Hotfix cmdlet you can query the list of hotfixes that have been applied to computers.

Get-Hotfix | Format-Table -AutoSize;

This will display the list of hotfixes installed on the local computer.

Source Description     HotFixID  InstalledBy         InstalledOn
------ -----------     --------  -----------         -----------
SO0590 Update          982861    NT AUTHORITY\SYSTEM 07/07/2011 00:00:00
SO0590 Update          KB958830  host\Administrator  07/07/2011 00:00:00
SO0590 Update          KB958830  host\Administrator
SO0590 Update          KB971033  host\Administrator
SO0590 Update          KB2264107 NT AUTHORITY\SYSTEM 06/08/2011 00:00:00
SO0590 Security Update KB2305420 host\Administrator  03/10/2011 00:00:00
SO0590 Security Update KB2393802 host\Administrator  03/10/2011 00:00:00
SO0590 Security Update KB2425227 host\Administrator  03/10/2011 00:00:00
SO0590 Security Update KB2475792 host\Administrator  03/10/2011 00:00:00
SO0590 Security Update KB2476490 NT AUTHORITY\SYSTEM
SO0590 Security Update KB2479628 host\Administrator  03/10/2011 00:00:00
SO0590 Security Update KB2479943 host\Administrator  03/10/2011 00:00:00
SO0590 Update          KB2484033 host\Administrator  03/10/2011 00:00:00
SO0590 Security Update KB2485376 host\Administrator  03/10/2011 00:00:00
SO0590 Update          KB2487426 host\Administrator  03/10/2011 00:00:00
SO0590 Update          KB2488113 NT AUTHORITY\SYSTEM 06/09/2011 00:00:00
SO0590 Security Update KB2491683 NT AUTHORITY\SYSTEM 06/09/2011 00:00:00
SO0590 Update          KB2492386 NT AUTHORITY\SYSTEM 06/09/2011 00:00:00

-

Fun with the Get-EventLog cmdlet

The Get-EventLog cmdlet is great for working with the Windows Event Logs on local and remote computers. It includes lots of parameters that make life much easier than using the Event Viewer GUI.

To list the available logs on the local computer, just execute:

Get-EventLog -List | Format-Table -AutoSize;

Max(K) Retain OverflowAction    Entries Log
------ ------ --------------    ------- ---
20,480      0 OverwriteAsNeeded  50,916 Application
20,480      0 OverwriteAsNeeded       0 HardwareEvents
   512      7 OverwriteOlder          0 Internet Explorer
20,480      0 OverwriteAsNeeded       0 Key Management Service
 8,192      0 OverwriteAsNeeded      52 Media Center
   128      0 OverwriteAsNeeded       3 OAlerts
                                        Security
20,480      0 OverwriteAsNeeded  56,258 System
15,360      0 OverwriteAsNeeded   2,889 Windows PowerShell

It's great for drilling down into the Event Logs to get the information you're most interested in. For example, this line of Powershell gets all errors in the Application Event Log from the last 24 hours:

Get-EventLog -LogName Application -EntryType Error -After $(Get-Date).AddHours(-24) | Format-Table -AutoSize;

This snippet can pull out all the Event Log errors from any combination of servers and logs within the last 24 hours. Great for those morning checks!

# Set servers to query
$servers = @("server1", "server2", "server3");
# Set event logs to query
$logs = @("System", "Application");

-

TRIGGER_NESTLEVEL TSQL Function

This function is used to determine the current nest level, i.e. the number of triggers on the call stack, including the current one. It can be used to stop a trigger's logic from running when it has been fired by another trigger. Here’s an example that does that: we have two tables, each with an AFTER INSERT trigger that inserts into the other table. The use of TRIGGER_NESTLEVEL allows us to control the flow gracefully.
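The trigger code isn't included here. The Python sketch below only models the control flow. It uses two hypothetical tables whose AFTER INSERT "triggers" copy each new row into the other table, with a counter standing in for TRIGGER_NESTLEVEL(). In T-SQL the equivalent guard is typically IF TRIGGER_NESTLEVEL() > 1 RETURN; at the top of each trigger. Without it, the triggers would keep firing each other until SQL Server's 32-level nesting limit aborts the statement (assuming the nested triggers server option is on, as it is by default).

```python
class TwoTableDatabase:
    """Toy model of two tables whose AFTER INSERT triggers copy rows into each
    other (hypothetical names; a simulation, not SQL Server code)."""

    def __init__(self) -> None:
        self.tables: dict[str, list[str]] = {"table_a": [], "table_b": []}
        self.nest_level = 0  # plays the role of TRIGGER_NESTLEVEL()

    def insert(self, table: str, value: str) -> None:
        self.tables[table].append(value)
        self.nest_level += 1          # entering the AFTER INSERT trigger
        try:
            self._after_insert(table, value)
        finally:
            self.nest_level -= 1      # leaving the trigger

    def _after_insert(self, table: str, value: str) -> None:
        # Guard: only act when this trigger was fired directly by a user's insert,
        # not by the other table's trigger.
        if self.nest_level > 1:
            return
        other = "table_b" if table == "table_a" else "table_a"
        self.insert(other, value)


if __name__ == "__main__":
    db = TwoTableDatabase()
    db.insert("table_a", "x")
    print(db.tables)  # the row appears once in each table, with no runaway recursion
```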

-

The CONNECTIONPROPERTY TSQL Function

The CONNECTIONPROPERTY function can be used to obtain information about the current connection. The information available is similar to the sys.dm_exec_connections system view.

-

Encryption with TSQL

SQL Server has a bunch of encryption functionality at its disposal. The EncryptByPassphrase function allows us to quickly encrypt data using a password. It uses the Triple DES algorithm (SQL Server 2017 and later use AES-256 instead) to protect data from prying eyes. To encrypt a section of text we supply a password and the text to the function:

-

Check for bad SQL Server login passwords

The PWDCOMPARE function is really handy for further securing your SQL Servers by checking for blank or common passwords. If you search online for a list of common passwords, you’ll probably recognise several if you’ve been working in IT for any reasonable amount of time. Fortunately, you can use this function in conjunction with the sys.sql_logins view to check an instance for bad passwords.

-

SSIS in a Failover Cluster: Failed to retrieve data for this request

I got this error when attempting to expand the MSDB SSIS package store on my recently built test cluster:

-

Statistical System functions in TSQL

TSQL has a bunch of statistical system functions that can be used to return information about the system. This includes details such as the number of connection attempts, CPU time, total reads and writes, and more.

-

The NTILE TSQL Function

The NTILE function is used to distribute records into the desired number of groups. NTILE assigns each record a number indicating the group it belongs to. Each group gets the same number of records if possible. Otherwise, the earlier groups get one more record than the later ones.
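The distribution rule is easy to see in code. The following Python sketch (ntile is a hypothetical helper, not a SQL Server API) computes the group number NTILE(groups) would give each of row_count already-ordered rows. When the rows don't divide evenly, the first row_count % groups groups each take one extra row.

```python
def ntile(row_count: int, groups: int) -> list[int]:
    """Group number for each of row_count ordered rows, mimicking NTILE(groups)."""
    if groups <= 0:
        raise ValueError("groups must be a positive integer")
    base, remainder = divmod(row_count, groups)
    tiles: list[int] = []
    for group in range(1, groups + 1):
        # The first `remainder` groups each take one extra row.
        size = base + (1 if group <= remainder else 0)
        tiles.extend([group] * size)
    return tiles


if __name__ == "__main__":
    print(ntile(10, 3))  # groups of 4, 3 and 3 rows
```

With fewer rows than groups (for example ntile(2, 5)), each row gets its own group and the higher group numbers are simply unused.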

-

Identify the Active Cluster Node with Powershell

I wanted to find a way of programmatically identifying the active node of a SQL Server Cluster. I found this post that demonstrated how to do it with TSQL. As I love Powershell so much, here’s another method to do it:

[System.Reflection.Assembly]::LoadWithPartialName("Microsoft.SqlServer.Smo") | Out-Null;

-

The GROUPING_ID TSQL Function

The GROUPING_ID function computes the level of grouping in a result set. It can be used in the SELECT, HAVING or ORDER BY clauses when used along with GROUP BY. The column expressions passed to this function must exactly match those used in the GROUP BY clause.
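Concretely, GROUPING_ID(a, b, ...) returns the GROUPING() flags of its columns read as a binary number, with the first column as the most significant bit. Here is a small Python sketch of that arithmetic (grouping_id is a hypothetical helper, not a SQL Server API):

```python
def grouping_id(*grouping_flags: int) -> int:
    """Combine GROUPING() flags (0 or 1), given in GROUPING_ID argument order,
    into one integer; the first column becomes the most significant bit."""
    result = 0
    for flag in grouping_flags:
        if flag not in (0, 1):
            raise ValueError("GROUPING() flags must be 0 or 1")
        result = (result << 1) | flag
    return result


if __name__ == "__main__":
    # For GROUP BY ROLLUP(Region, Product):
    print(grouping_id(0, 0))  # detail row
    print(grouping_id(0, 1))  # per-Region subtotal (Product rolled up)
    print(grouping_id(1, 1))  # grand total
```

For GROUP BY ROLLUP(Region, Product), detail rows therefore give 0, per-Region subtotals give 1, and the grand total gives 3. That makes GROUPING_ID convenient for filtering out particular levels in a HAVING clause.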

-

Failover All Cluster Resources With Powershell

Here's a simple example showing how to manage the failover process with Powershell, making sure all resources are running on one node. First, execute the below command to show which node owns the resources.

Get-ClusterGroup -Cluster ClusterName | Format-Table -AutoSize;

Name              OwnerNode State
----              --------- -----
SoStagSQL20Dtc    Node1     Online
SQL Server        Node1     Online
Cluster Group     Node1     Online
Available Storage Node1     Online

In this example all services are currently running on Node1. It's a simple Powershell one-liner to fail everything over to another node. The following example assumes a two-node cluster, so all services will be failed over to the other node:

Get-ClusterNode -Cluster ClusterName -Name Node1 | Get-ClusterGroup | Move-ClusterGroup | Format-Table -Autosize;

All services are now running on Node2

Name              OwnerNode State
----              --------- -----
SoStagSQL20Dtc    Node2     Online
SQL Server        Node2     Online
Cluster Group     Node2     Online
Available Storage Node2     Online

-

The GROUPING TSQL Function

You can use the GROUPING function to indicate whether a column in a result set has been aggregated or not. A value of 1 is returned if the result is aggregated, otherwise 0 is returned. It is best used to identify the additional rows returned when a query uses the ROLLUP, CUBE or GROUPING SETS clauses.
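GROUPING matters because the extra row that ROLLUP adds has NULL in the grouped column, and that looks identical to a genuine NULL group in the data. The Python sketch below emulates SUM(amount) ... GROUP BY ROLLUP(region) with a GROUPING(region) column. It is a simulation of the idea, not SQL Server output, and the column names are hypothetical.

```python
from collections import defaultdict


def rollup_with_grouping(rows: list[tuple]) -> list[tuple]:
    """Emulate SUM(amount) ... GROUP BY ROLLUP(region) plus GROUPING(region).

    Returns (region, total, grouping_flag) tuples; only the grand-total row
    added by the rollup has grouping_flag 1.
    """
    totals: defaultdict = defaultdict(int)
    for region, amount in rows:
        totals[region] += amount  # dicts keep first-seen order
    result = [(region, total, 0) for region, total in totals.items()]
    result.append((None, sum(totals.values()), 1))  # the extra ROLLUP row
    return result


if __name__ == "__main__":
    sales = [("East", 10), ("West", 7), ("East", 5), (None, 3)]
    for row in rollup_with_grouping(sales):
        print(row)
```

Both the genuine NULL-region group and the grand total show None as the region, and only the GROUPING flag tells them apart.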

-

Cluster network 'SAN1' is partitioned

You may encounter this error about your storage networks when setting up a Windows Server 2008 Failover Cluster. The following errors, Event ID 1129, will show up in Cluster Events…

-

The APP_NAME TSQL Function

The APP_NAME function returns the name of the application for the current database connection if the application has set it. Run the following in SSMS:

-

Assign a user role for all databases

I’m moving the backup jobs we run onto specific users and need to assign the db_backupoperator role to the user in each database. That’s very tedious to do in SSMS, so here’s a quick script I knocked up.

-

Powershell Primary Key & Clustered Index Check

It’s considered bad practice not to use primary keys and clustered indexes, so here’s a Powershell script that makes checking a database for them very easy. Just set $server to the SQL instance you want to check, and $database as appropriate.

[System.Reflection.Assembly]::LoadWithPartialName("Microsoft.SqlServer.Smo") | Out-Null;

-

Synchronize Mysql slave tables with mk-table-sync

I’ve been meaning to check out Maatkit for a while now. Today I had reason to, as one of our MySQL slaves got out of sync with the master. I’d heard about mk-table-sync, a tool that synchronizes tables, so I thought I’d give it a shot.

-

Counting objects between databases

I’ve been looking at using Powershell in our release process to automate various things. I’ve used it to compare table data between databases and I’m now thinking of using it to validate our schema upgrades. I want to be easily alerted to any missing tables, columns, stored procedures and other objects.

[System.Reflection.Assembly]::LoadWithPartialName("Microsoft.SqlServer.Smo") | Out-Null;

-

List Sql Server Processes with Powershell

I was looking for a way to grab a list of processes running inside SQL Server but wasn’t having much luck. Essentially I wanted something like the Get-Process cmdlet, but for SQL Server. Shortly after tweeting for help I stumbled across the EnumProcesses SMO method.

[System.Reflection.Assembly]::LoadWithPartialName("Microsoft.SqlServer.Smo") | Out-Null;

-

The Poor Mans data compare with Powershell

Each new cmdlet I discover makes me fall in love with Powershell a little bit more. A while ago I discovered the Compare-Object cmdlet. The examples given in the documentation demonstrate how to compare computer processes and text files but I was interested to see if this would work with a dataset. So I tried it.
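Compare-Object marks each difference with a SideIndicator: "<=" for items found only in the reference set and "=>" for items found only in the difference set. The Python sketch below reproduces that convention for two datasets represented as lists of row tuples. It is set-based, so duplicate rows are collapsed, which is a simplification of what Compare-Object does. The compare_rows name and the sample rows are hypothetical.

```python
def compare_rows(reference: list[tuple], difference: list[tuple]) -> list[tuple]:
    """Return (row, side_indicator) pairs: "<=" for rows only in reference,
    "=>" for rows only in difference. Rows must be hashable (e.g. tuples)."""
    ref_set, diff_set = set(reference), set(difference)
    # dict.fromkeys removes duplicates while keeping the original row order.
    only_ref = [(row, "<=") for row in dict.fromkeys(reference) if row not in diff_set]
    only_diff = [(row, "=>") for row in dict.fromkeys(difference) if row not in ref_set]
    return only_ref + only_diff


if __name__ == "__main__":
    staging = [(1, "a"), (2, "b"), (3, "c")]
    live = [(1, "a"), (2, "B"), (4, "d")]
    for row, side in compare_rows(staging, live):
        print(side, row)
```

Note that a changed value, like row 2 above, shows up twice: once as "<=" with the old value and once as "=>" with the new one.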

-

Check SQL Agent Job Owners with Powershell